Domain Adaptation Language Model

The models outperformed the model without domain adaptation.

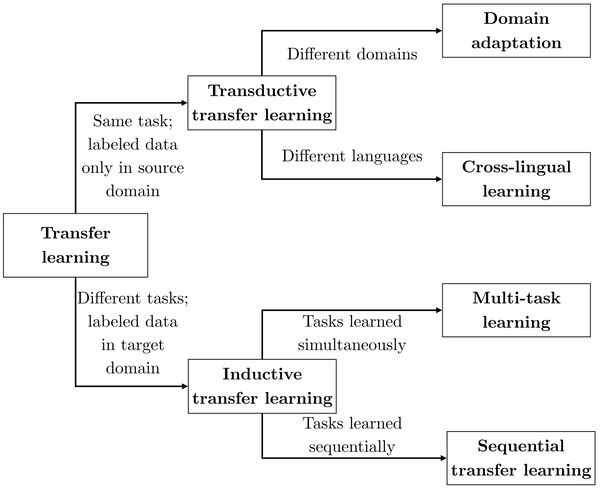

The general objective of this project is to explore methods for adapting a language model trained on a source domain, for which relatively large corpora are available, to a somewhat similar target domain with limited data available. In particular, the proposed model yielded an improvement of 4.3/4.2 points in EM/F1 in an unseen biomedical domain. The approach uses training data similar to that of the target domain to improve the model. Jonker, Department of Intelligent Systems, Delft University of Technology.
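The EM and F1 figures quoted above are the usual reading comprehension metrics: exact match between predicted and reference answers, and token-level overlap F1. The following is a minimal sketch of how such scores are commonly computed. The function names and the simplified normalization (lowercasing and whitespace splitting only) are assumptions for illustration, not the evaluation code used in the cited work.

```python
from collections import Counter


def normalize(text: str) -> list[str]:
    # Simplified normalization (assumption): lowercase and split on whitespace.
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    # 1.0 if the normalized token sequences are identical, else 0.0.
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    # Harmonic mean of token-level precision and recall.
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


em = exact_match("Insulin", "insulin")
f1 = token_f1("the insulin receptor", "insulin receptor")
```

Published benchmarks typically apply further normalization (for example, stripping punctuation and articles) and average the scores over all questions, so absolute numbers from this sketch would not be directly comparable.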

Fast Gated Neural Domain Adaptation. Language Model Domain Adaptation via Recurrent Neural Networks with Domain-Shared and Domain-Specific Representations. The idea is to come up with the best adaptation technique.

April 2018. Institute of Electrical and Electronics Engineers Inc., 2018. We present (Section 3) the Pivot Based Language Model (PBLM), a domain adaptation model that (a) is aware of the structure of its input text. More broadly, adapting a model to the local context can be seen as an instance of domain adaptation. Training recurrent neural network language models (RNNLMs) requires a large amount of data, which is difficult to collect for specific domains such as multiparty conversations.

Particularly, the model is a sequential NN (LSTM) that operates very similarly to LSTM language models (LSTM-LMs). Unsupervised Domain Adaptation of Language Models for Reading Comprehension. To provide fine-grained probability adaptation for each n-gram, we propose in this work three adaptation methods based on shared linear transformations. Language model (LM) adaptation is an active area in natural language processing and has been successfully applied to speech recognition and to many other applications.
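As a rough illustration of LM adaptation in general, the sketch below linearly interpolates a source-domain model with a target-domain model and picks the interpolation weight on held-out target text. It is not the shared linear transformation approach described above. The toy corpora, the add-one smoothed unigram models, and all function names are assumptions chosen to keep the example self-contained.

```python
import math
from collections import Counter


def unigram_lm(tokens, vocab):
    # Add-one smoothed unigram probabilities over a fixed vocabulary.
    counts = Counter(tokens)
    total = len(tokens) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}


def interpolate(p_source, p_target, lam):
    # lam is the weight on the target-domain model, in [0, 1].
    return {w: lam * p_target[w] + (1 - lam) * p_source[w] for w in p_source}


def perplexity(lm, tokens):
    # exp of the average negative log-probability per token.
    log_prob = sum(math.log(lm[w]) for w in tokens)
    return math.exp(-log_prob / len(tokens))


# Toy data (hypothetical): a larger source corpus and a small target corpus.
source = "the cat sat on the mat the dog sat on the rug".split()
target = "the protein binds the receptor".split()
heldout = "the receptor binds the protein".split()
vocab = set(source) | set(target) | set(heldout)

p_src = unigram_lm(source, vocab)
p_tgt = unigram_lm(target, vocab)

# Grid search for the weight that minimizes held-out target perplexity.
best_lam = min(
    (step / 10 for step in range(11)),
    key=lambda lam: perplexity(interpolate(p_src, p_tgt, lam), heldout),
)
```

With realistic data the held-out set should be disjoint from the target training text, and the component models would be higher-order n-gram or neural LMs rather than unigrams.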

N-gram-based linear regression, interpolation, and direct estimation. 2018 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Proceedings. Recurrent Neural Network Language Model Adaptation with Curriculum Learning. Yangyang Shi, Martha Larson, Catholijn M.

Ruidan He, Hai Ye², Ng Hwee Tou², Lidong Bing³, Rui Yan¹. ¹Center for Data Science, Academy for Advanced Interdisciplinary Studies, Peking University; ²School of Computing, National University of Singapore; ³DAMO Academy, Alibaba Group. {lijuntao, ruiyan}@pku.edu.cn, {dcsyeh, nght}@comp.nus.edu.sg